The 3D Production Pipeline

By Evan Long

Understanding the stages in a 3D production pipeline is essential for any 3D animator. Whilst ideas are a dime a dozen, knowing how to work your idea into reality is the cornerstone of being an effective animator. Here we will take you through each stage of a 3D production pipeline:

1. Pre-production

2. 3D Modelling

3. UV Mapping

4. Texturing and Shaders

5. Rigging

6. Animation

Let’s get started!

Got a great idea for a 3D character or animation? Well that’s the first step in the pre-production process. It’s here in pre-production where your big idea is fleshed out into a story with a plot and characters. Be prepared though, judgements will be passed!

If your idea or story passes muster, then the next step will be concept design. Discussions about concept design may include things like the style, look, sets and characters. From here, storyboards (frames depicting the story and concept design) are developed to further shape the scenes and show how the animation may look in production.

The following video shows an animatic (animated storyboards) from a deleted scene in Big Hero 6.

Animatic Storyboard (Walt Disney Animation Studio, 2015)

From the storyboards, more detailed concept art and reels with audio and dialogue may be developed.

Figure 1: Concept art created for a Hiro-Tadashi moment, based on an early storyboard idea by Armand Serrano

If you’ve made it this far, you’ll now be looking at turning your concept designs into 3D models ready to be textured, rigged and animated where necessary. Nowadays animators have a range of powerful software applications to assist with the modelling process. Autodesk has developed a suite of industry-standard applications, including 3ds Max, Maya and Mudbox, that give animators the tools to make their design concepts a reality in three-dimensional space.

“Modeling is the process of taking a shape and molding it into a completed 3D mesh. The most typical means of creating a 3D model is to take a simple object, called a primitive, and extend or "grow" it into a shape that can be refined and detailed. Primitives can be anything from a single point (called a vertex), a two-dimensional line (an edge), a curve (a spline: Also referred to as NURBS - Non Uniform Rational B-Splines), to three dimensional objects (faces or polygons)” (www.animationarena.com).

Figure 2: The process of building a 3D mesh (forums.autodesk.com)
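To make the vocabulary of vertices, edges and faces concrete, here is a minimal Python sketch of a cube primitive stored as a polygon mesh. The layout (a list of points plus a list of faces that index into it) is an assumption chosen for illustration; it is not how Maya or any other package stores its meshes internally, and the `mesh_edges` helper is hypothetical.

```python
# A unit cube as a polygon mesh: 8 vertices (points in 3D space)
# and 6 four-sided faces. Each face lists the indices of its corner vertices.
vertices = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),
]
faces = [
    (0, 1, 2, 3),  # z = 0 side
    (4, 5, 6, 7),  # z = 1 side
    (0, 1, 5, 4),  # y = 0 side
    (2, 3, 7, 6),  # y = 1 side
    (1, 2, 6, 5),  # x = 1 side
    (0, 3, 7, 4),  # x = 0 side
]


def mesh_edges(faces):
    """Collect the unique edges (pairs of vertex indices) around every face."""
    edges = set()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            # Store each edge with the smaller index first so that an edge
            # shared by two faces is only counted once.
            edges.add((min(a, b), max(a, b)))
    return sorted(edges)


edges = mesh_edges(faces)
print(len(vertices), len(edges), len(faces))  # 8 12 6
```

Modelling tools "grow" a primitive like this by adding, moving and splitting these same three kinds of component.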

For photorealistic 3D models, photographic references imported into image planes enable 3D artists to model the object accurately.

Figure 2a: An example of photographic references imported as Image planes (Image Source: www.digitaltutors.com)

Figure 3: A human head mesh from www.digitaltutors.com

There are many great online tutorials around to support 3D animators and modellers (Digital Tutors and YouTube to name a few) in developing their skills as artists.

This YouTube video demonstrates how to create a low poly 3D model of a human.

It is important that the topology of your 3D mesh is clean and contains only the necessary detail.

Typically, 3D models developed for games have lower poly counts than models developed for movies, because games must be rendered in real time in front of the player and are dependent on the rendering capacity of the game engine. Movies, on the other hand, are pre-rendered, so render time is the main hurdle.

For more information on the polycount differences between games and movies visit:

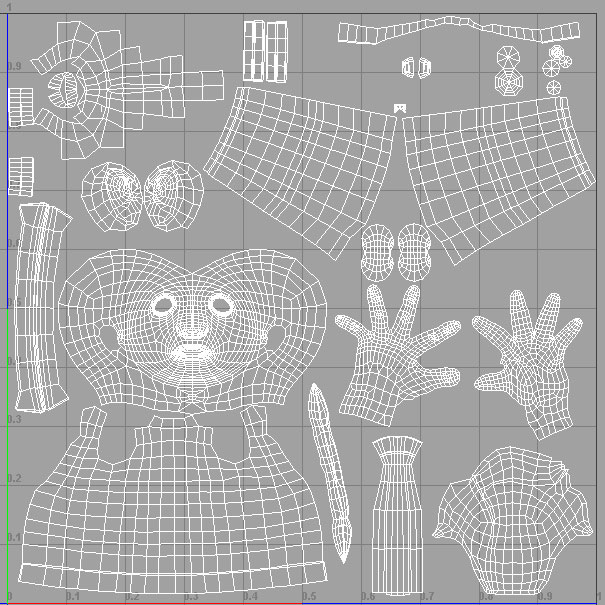

Now you’ve got a great 3D model, but as it is now, it’s just a mesh without textures, colours and shading. Put simply, it’s lifeless! Before we can add our shaders and textures, we need to cut up our 3D mesh by creating seams and flatten it out into a 2 dimensional image map (usually saved as a PNG or JPG) so we can paint and texture it. This is the process of UV mapping.

Figure 4: An example of a 2 dimensional UV map (cgi.tutsplus.com). This UV map shows the UV layout where each part of the mesh can be individually edited or textured as UV Shells.

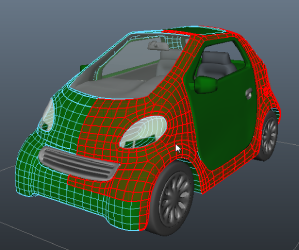

In Maya 2016, a UV shell is highlighted red when the mouse hovers over it.

Note: It’s important that UV maps do not contain overlapping edges or vertices, as this will affect the appearance of the treated map once it is applied to the model. You can avoid overlaps by editing the UV shells in the UV editor. Time spent on a good UV layout now is time saved fixing errors in your map later.
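As a rough illustration of what "overlapping" means in UV space, the following Python sketch flags pairs of UV shells whose bounding boxes intersect. It is a simplified check under stated assumptions: the shell names and coordinates are made up, and a bounding-box test is only a conservative approximation (two shells can have overlapping boxes without their faces actually overlapping). It is not the check that Maya's UV editor performs.

```python
def bounding_box(shell):
    """Return (min_u, min_v, max_u, max_v) for a list of (u, v) points."""
    us = [u for u, _ in shell]
    vs = [v for _, v in shell]
    return min(us), min(vs), max(us), max(vs)


def boxes_overlap(a, b):
    """True if two (min_u, min_v, max_u, max_v) boxes share any area."""
    a_min_u, a_min_v, a_max_u, a_max_v = a
    b_min_u, b_min_v, b_max_u, b_max_v = b
    return (a_min_u < b_max_u and b_min_u < a_max_u
            and a_min_v < b_max_v and b_min_v < a_max_v)


def overlapping_shells(shells):
    """List every pair of shell names whose bounding boxes intersect."""
    names = sorted(shells)
    boxes = {name: bounding_box(shells[name]) for name in names}
    return [(a, b)
            for i, a in enumerate(names)
            for b in names[i + 1:]
            if boxes_overlap(boxes[a], boxes[b])]


# Hypothetical layout: the "arm" shell has been left sitting across two others.
shells = {
    "face": [(0.05, 0.55), (0.45, 0.55), (0.45, 0.95), (0.05, 0.95)],
    "torso": [(0.55, 0.05), (0.95, 0.05), (0.95, 0.60), (0.55, 0.60)],
    "arm": [(0.40, 0.50), (0.60, 0.50), (0.60, 0.70), (0.40, 0.70)],
}
print(overlapping_shells(shells))  # [('arm', 'face'), ('arm', 'torso')]
```

Moving or scaling the flagged shell until the list comes back empty is the scripted equivalent of tidying the layout by hand.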

Figure 4a: An example of a treated 2 dimensional UV map ready to be applied to the model (http://forum.unity3d.com/).

Figure 5: The UV shell is highlighted red (knowledge.autodesk.com)

Now you’ve created a UV map for your model, it’s time to apply colours and textures. This is the part of the modelling process that

Figure 6: A textured 3D model from www.creativebloq.com

Software applications like ZBrush and Adobe Photoshop may be used to apply textures to your 3D model and UV image maps, while Autodesk’s Maya also has some shaders that can be applied directly to your UV shells and 3D model.

In Maya, the Hypershade tool enables the user to select a variety of shaders to apply to individual UV shells or the entire model. Each shader has its own characteristics that make it suitable for particular types of surface. For matte finishes or low reflectivity, the Lambert shader may be a suitable choice, while for surfaces that require greater reflectivity and specularity, Blinn or Phong shaders may be more suitable. All shaders come with their own set of control parameters that allow the user to manipulate each attribute of the shader, giving fine control over how the surface responds to the lighting and environment of your 3D scene.
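The difference between a matte Lambert surface and a shinier Blinn surface can be sketched with the textbook formulas these shading models are named after. This is an illustrative Python sketch only: the function names and the `shininess` parameter are assumptions, and Maya's shaders expose many more attributes than are shown here.

```python
import math


def normalize(v):
    """Scale a non-zero 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert_diffuse(normal, to_light):
    """Matte shading: brightness depends only on the angle to the light."""
    return max(0.0, dot(normalize(normal), normalize(to_light)))


def blinn_specular(normal, to_light, to_eye, shininess=32.0):
    """Glossy highlight: brightest where the surface normal lines up with
    the direction halfway between the light and the viewer."""
    n, l, e = normalize(normal), normalize(to_light), normalize(to_eye)
    if dot(n, l) <= 0.0:
        return 0.0  # the light is behind the surface
    half = tuple(a + b for a, b in zip(l, e))
    if all(c == 0.0 for c in half):
        return 0.0  # light and viewer are exactly opposite each other
    return max(0.0, dot(n, normalize(half))) ** shininess


normal = (0.0, 0.0, 1.0)
print(lambert_diffuse(normal, (0.0, 0.0, 1.0)))  # 1.0, light straight on
print(lambert_diffuse(normal, (1.0, 0.0, 0.0)))  # 0.0, light grazing the surface
print(blinn_specular(normal, (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)))  # 1.0
```

Raising `shininess` tightens the highlight, which is why a Blinn-style shader reads as polished while a Lambert-style shader, having no specular term at all, reads as matte.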

For further options in creating detailed texturing, the UV maps created earlier can be imported into programs like Adobe Photoshop and painted onto. The painted UV maps can then be imported back into the 3D software and applied over the shaders onto the model.

The following video tutorials by Antony Ward (2014) from www.creativebloq.com are an extensive resource for learning how to apply the aforementioned techniques, as well as how to apply sophisticated textures and manipulate shaders to produce highly effective results for your 3D model.

The first of 14 videos is shown here:

Other topics covered in these video tutorials important in refining the appearance of your 3D model include:

- Planar projections https://www.youtube.com/embed/LG0EvddoKfk
- Unfolding UVs https://www.youtube.com/embed/GOyZtHD5cks
- Baking Maps https://www.youtube.com/embed/0uSRbNPrv0U
- Occlusion Map https://www.youtube.com/embed/YS6RkiIb6Nc
- Applying Colours https://www.youtube.com/embed/VQTewce2cSo
- Duplicating and Diffusing https://www.youtube.com/embed/4ia5i5Z4CyQ
- Texture Pages https://www.youtube.com/embed/nH8TnL-AUO8
- Adding Textures https://www.youtube.com/embed/BpkvdTkIlTs
- Blending Modes https://www.youtube.com/embed/F8L3MKeYbJ4
- Texture Painting with a Pen https://www.youtube.com/embed/aqwrgoNWLCw
- Generating Maps
- Specular Maps https://www.youtube.com/embed/8754C98gcIA

So up to now you've managed to create a great looking 3D model with textures and shaders, but it doesn't move! Before the model can be animated, we need to apply a 'digital skeleton' (a system of joints and control handles) to the model for the animators to manipulate. This process is called rigging, and it is the final step before the model is animated.

To rig a 3D model, a skeleton is placed inside and bound to the model, much as a real skeleton sits inside a living animal. For a rig to function correctly, the joints must be placed in a logical joint hierarchy. The first joint placed is called the root joint, and all other joints and bones must be connected to it, directly or through other joints. For example, when rigging an arm the root joint would be the shoulder joint, followed in turn by the elbow, wrist and fingers.

Figure 7: A skeletal rig demonstrating joint hierarchy.

Two important concepts when discussing rigging are Forward Kinematics (FK) and Inverse Kinematics (IK).

- Forward Kinematics (FK) describes a rig in which each joint can only affect the joints below it in the joint hierarchy.

- Inverse Kinematics (IK). "IK rigging is the reverse process from forward kinematics, and is often used an efficient solution for rigging a character's arms and legs. With an IK rig, the terminating joint is directly placed by the animator, while the joints above it on the hierarchy are automatically interpolated by the software." (Slick, J., 2015).
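The contrast between FK and IK can be sketched for a simple two-bone arm (shoulder, elbow, wrist) working in 2D. This is a minimal Python illustration under stated assumptions: the function names are hypothetical, the shoulder sits at the origin, and the IK solver is the standard analytic two-bone solution rather than whatever solver a particular 3D package uses.

```python
import math


def forward_kinematics(shoulder_angle, elbow_angle, upper_len, fore_len):
    """FK: the animator sets joint angles (radians); positions follow.

    The elbow angle is measured relative to the upper arm, so rotating the
    shoulder also moves everything below it in the hierarchy.
    """
    elbow = (upper_len * math.cos(shoulder_angle),
             upper_len * math.sin(shoulder_angle))
    total = shoulder_angle + elbow_angle
    wrist = (elbow[0] + fore_len * math.cos(total),
             elbow[1] + fore_len * math.sin(total))
    return elbow, wrist


def inverse_kinematics(target, upper_len, fore_len):
    """IK: the animator places the wrist; the joint angles are solved for."""
    x, y = target
    dist_sq = x * x + y * y
    # Law of cosines gives the elbow bend. Clamping keeps the arm fully
    # stretched (or fully folded) when the target is out of reach.
    cos_elbow = (dist_sq - upper_len ** 2 - fore_len ** 2) / (2 * upper_len * fore_len)
    cos_elbow = max(-1.0, min(1.0, cos_elbow))
    elbow_angle = math.acos(cos_elbow)
    shoulder_angle = math.atan2(y, x) - math.atan2(
        fore_len * math.sin(elbow_angle),
        upper_len + fore_len * math.cos(elbow_angle),
    )
    return shoulder_angle, elbow_angle


# Place the wrist with IK, then confirm with FK that the arm reaches it.
shoulder, elbow_bend = inverse_kinematics((1.2, 0.8), 1.0, 1.0)
_, wrist = forward_kinematics(shoulder, elbow_bend, 1.0, 1.0)
print(round(wrist[0], 6), round(wrist[1], 6))  # 1.2 0.8
```

With FK the animator would have to find those two angles by trial and error; with IK they simply drag the wrist and the angles are filled in.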

To assist animators in applying realistic animated movement sequences to 3D models, degrees of freedom or constraints can be applied to the joints. Constraints are used to limit the amount of movement each joint can perform. This enables animators to accurately mimic movements observed in nature.

Facial rigging is a separate and complex process that requires a more technical rig than a traditional joint structure.

Animating a rigged 3D model is possibly the most challenging skill to master in the 3D production pipeline. This is why many companies will employ specialist animators to perform this part of the production process. Producing realistic and entertaining animation requires a high level of observational and technical skill.

Basically there are two main types of animation for 3D projects:

1. Keyframe animation

Keyframe animation is the process of setting the pose or value of an object at individual frames on a timeline (the keyframes) and letting the software interpolate, or tween, a smooth transition between them. The speed of playback is expressed in frames per second (fps). For example, if the project is set at 24 fps then 24 frames are displayed for every second of the animation, but only the keyframes need to be edited by hand.

2. Motion Capture

"Motion capture, or mocap, was first used sparingly due to the limitations of the technology, but is seeing increased acceptance in everything from video game animation to CG effects in movies as the technique matures. Whereas keyframing is a precise, but slow animation method, motion capture offers an immediacy not found in traditional animation techniques. Mocap subjects, usually actors, are placed in a special suit containing sensors that record the motion of their limbs as they move. The data is then linked to the rig of a 3D character and translated into animation by the 3D software" (www.animationarena.com).
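The keyframe approach described above can be sketched in a few lines of Python. This is a minimal illustration assuming straight-line (linear) interpolation between keys; animation packages usually offer smoother curve-based interpolation as well, and the `interpolate` helper and the key values here are made up for the example.

```python
FPS = 24  # frames displayed per second of animation


def interpolate(keyframes, frame):
    """Return the animated value at `frame`.

    `keyframes` maps a frame number to the value the animator set there.
    Frames between two keys are filled in by linear interpolation; frames
    before the first key or after the last key hold the nearest key's value.
    """
    frames = sorted(keyframes)
    if frame <= frames[0]:
        return keyframes[frames[0]]
    if frame >= frames[-1]:
        return keyframes[frames[-1]]
    for start, end in zip(frames, frames[1:]):
        if start <= frame <= end:
            t = (frame - start) / (end - start)
            return keyframes[start] + t * (keyframes[end] - keyframes[start])


# Three hand-set keys describe two seconds (48 frames) of movement.
height_keys = {0: 0.0, 24: 10.0, 48: 4.0}
print(interpolate(height_keys, 12))  # 5.0, halfway through the first second
print(interpolate(height_keys, 36))  # 7.0, halfway through the second second
print(48 / FPS)                      # 2.0 seconds of animation
```

Only three values were entered by hand; every other frame is generated, which is what makes keyframing practical for long shots.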

Last week we looked at UV mapping, texturing and shaders, rigging and animation. This week we'll be looking at the fundamentals of:

7. Lighting

8. Rendering

9. Compositing

So far we've looked at everything a 3D artist needs to do to model, texture, rig and animate a 3D scene. It looks good, but it's not quite ready for the big screen just yet. So what now? One of the most important factors in getting your scene to look and feel real is lighting.

When applying lighting, the 3D artist must consider many variables including whether the scene will be set in an interior or exterior environment.

Generally, the following lighting options exist in most 3D software packages to help animators create effective lighting:

A point light casts illumination outwards in every direction from a single small point in 3 dimensional space. This type of light can be useful for things like simulating light bulbs, strip lighting or fairy lights.

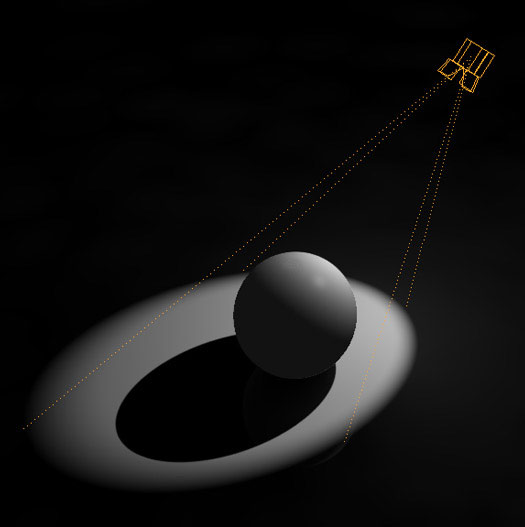

Figure 1: An example of how point light can illuminate an object. (https://support.solidangle.com/display/AFCUG/Point+Light)

Directional lights cast parallel light rays in a single direction, as the sun does (for all practical purposes) at the surface of the earth. Directional lights are primarily used to simulate sunlight. You can adjust the color of the light and position and rotate the light in 3D space. (Knowledge.autodesk.com).

Figure 2: This illustration demonstrates how directional light may be used to light a 3D scene. (http://blog.digitaltutors.com/understanding-different-light-types/)

Just as the name implies, a spot light functions as you would expect a real-life spot light to. It directs a cone of light at the object to be lit. Effective uses of spot lights include things like torches and lamps.

Figure 3: An example of how a spot light can be used to cast directional light onto an object. https://flippednormals.com/wp-content/uploads/2013/11/intro3D_lightSpot.jpg
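The three light types covered so far differ mainly in how the direction of the light reaching a surface point is worked out. The Python sketch below illustrates that difference. The function names and the idea of describing a spot light by a single full cone angle are assumptions for the example, not the parameters of any specific 3D package.

```python
import math


def subtract(a, b):
    return tuple(x - y for x, y in zip(a, b))


def normalize(v):
    """Scale a non-zero 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def point_light_direction(light_position, surface_point):
    """Point light: rays fan out from one position, so the direction is
    different for every surface point (which must not be the light itself)."""
    return normalize(subtract(surface_point, light_position))


def directional_light_direction(light_direction):
    """Directional light: parallel rays, the same everywhere in the scene."""
    return normalize(light_direction)


def spot_light_reaches(light_position, aim_direction, cone_angle_degrees, surface_point):
    """Spot light: a point light restricted to a cone around its aim."""
    to_point = point_light_direction(light_position, surface_point)
    cos_to_point = dot(to_point, normalize(aim_direction))
    return cos_to_point >= math.cos(math.radians(cone_angle_degrees / 2.0))


lamp = (0.0, 5.0, 0.0)   # hypothetical light hanging 5 units above the floor
down = (0.0, -1.0, 0.0)
print(point_light_direction(lamp, (0.0, 0.0, 0.0)))           # (0.0, -1.0, 0.0)
print(spot_light_reaches(lamp, down, 40.0, (0.0, 0.0, 0.0)))  # True, inside the cone
print(spot_light_reaches(lamp, down, 40.0, (5.0, 0.0, 0.0)))  # False, outside the cone
```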

An area light is a light that casts directional light rays from within a set boundary, either a rectangle or circle. This type of light is perfect for recreating fluorescent lights or something that is rectangular (Masters, 2013).

Figure 4: Area lights applied to a 3D scene (http://blog.digitaltutors.com/understanding-different-light-types/)

With default settings, a volumetric (volume) light is almost identical to a point light, emitting omnidirectional rays from a central point. However, unlike a point light, a volumetric light has a specific shape and size, both of which affect its falloff pattern. A volumetric light can be set in the shape of any geometric primitive (cube, sphere, cylinder, etc.), and its light will only illuminate surfaces within that volume (Slick, 2015).

Figure 5: Volume light applied to an object (http://tech316.blogspot.com.au/2007/09/maya-light-types.html)

Ambient light is a light that strikes an object from every direction. The ambient shade attribute controls how the light strikes the surface of an object (Tech316.blogspot.com, 2007).

Figure 6: Ambient lighting casts light in every direction. (http://blog.digitaltutors.com/understanding-different-light-types/)

Wow! Doesn't thoughtful, strategic lighting make a difference to the overall look of a 3D scene? So here we are at one of the final steps in the 3D production pipeline. We now need to render our scene ready for the world to see.

Rendering your 3D model or animation into an image or video file is such a crucial part of the 3D production process that dedicated plug-ins have been developed to assist with it. Rendering is heavily constrained by the processing power of the computer and the time available. Depending on the complexity of the project, rendering can take anywhere from minutes to days.

In Maya, mental ray is an example of a plug-in that can produce effective results; however, it increases render times and places an additional load on computer processors and graphics hardware.

Figure 7: A scene rendered using Maya's Mental Ray (http://www.lugher3d.com/forum/post-and-view-finished-work/3d-stills/first-interior-render-in-mental-ray-using-maya)

The following information from www.braindistrict.com provides technical descriptions of the three techniques commonly used for rendering:

1. Ray tracing

Ray tracing is a technique for generating an image by tracing the path of light through pixels in an image plane and simulating the effects of its encounters with virtual objects. The technique is capable of producing a very high degree of visual realism, usually higher than that of typical scanline rendering methods, but at a greater computational cost. This makes ray tracing best suited for applications where the image can be rendered slowly ahead of time, such as in still images and film and television visual effects, and more poorly suited for real-time applications like video games where speed is critical.

2. Radiosity

Radiosity is a rendering technique capable of highly photorealistic results. It treats each object separately and considers each surface a potential source of light, so light reflected from a wall, for example, falls on a nearby object and bounces off it in turn.

3. Scanline

Scanline rendering is used when speed is a necessity, which makes it the preferred technique for real-time rendering. It works on a row-by-row basis rather than a pixel-by-pixel or polygon-by-polygon basis. The main advantage of this method is that sorting vertices along the normal of the scanning plane reduces the number of comparisons between edges.
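Of the three techniques, ray tracing is the easiest to sketch in code. The Python example below fires one ray from the camera through each pixel of a tiny image plane and records whether it hits a single sphere. It is a toy illustration under stated assumptions (camera at the origin looking down the negative z axis, one hard-coded sphere, no lighting or reflections), not how mental ray or any production renderer is implemented.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a ray to the nearest hit in front of it, or None.

    `direction` must be normalized. The far intersection is ignored, so a
    ray that starts inside the sphere is reported as a miss.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    discriminant = b * b - 4.0 * c
    if discriminant < 0.0:
        return None  # the ray passes the sphere without touching it
    t = (-b - math.sqrt(discriminant)) / 2.0
    return t if t > 0.0 else None


def render(width, height, center=(0.0, 0.0, -3.0), radius=1.0):
    """Trace one ray per pixel; '#' marks a hit and '.' marks a miss."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel centre onto an image plane at z = -1.
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            length = math.sqrt(x * x + y * y + 1.0)
            direction = (x / length, y / length, -1.0 / length)
            hit = ray_sphere_hit((0.0, 0.0, 0.0), direction, center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


for line in render(21, 11):
    print(line)
```

A full ray tracer would go on to shade each hit point and spawn further rays for shadows and reflections, which is where the extra computational cost comes from.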

The process of 3D compositing typically involves multiple input files, including still images and video files, which are assembled and layered during the 3D compositing process. Effects can be more easily applied to a scene or still image through 3D compositing, such as having objects in one layer affect lighting in another. This type of compositing is also typically used to create more realistic images generated in 3D graphics software without overtaxing rendering computers, by rendering multiple passes for a scene or object that are then composited together for a final image (http://www.wisegeek.com).
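At the level of a single pixel, layering rendered passes usually comes down to blending one colour over another according to its transparency. The Python sketch below uses the widely used "over" operator on RGBA values in the range 0 to 1. It is an illustration only: the source does not name a specific blending method, the function names are hypothetical, and real compositing packages offer many other blend modes.

```python
def over(foreground, background):
    """Composite one RGBA pixel over another (straight, non-premultiplied alpha)."""
    fr, fg, fb, fa = foreground
    br, bg, bb, ba = background
    out_alpha = fa + ba * (1.0 - fa)
    if out_alpha == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # both pixels are fully transparent

    def blend(f, b):
        # Weight each colour by how much of it remains visible.
        return (f * fa + b * ba * (1.0 - fa)) / out_alpha

    return (blend(fr, br), blend(fg, bg), blend(fb, bb), out_alpha)


def composite(layers):
    """Stack layers listed from bottom to top into one final pixel."""
    result = (0.0, 0.0, 0.0, 0.0)
    for layer in layers:
        result = over(layer, result)
    return result


background_pass = (0.0, 0.0, 1.0, 1.0)  # opaque blue backdrop
character_pass = (1.0, 0.0, 0.0, 0.5)   # half-transparent red element
print(composite([background_pass, character_pass]))  # (0.5, 0.0, 0.5, 1.0)
```

Because each pass is a separate image, a single layer can be re-rendered or colour-corrected without re-rendering the whole scene.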

Figures 7-9: Compositing 3D images and scenes.

1. Solidangle.com (2015). Point light [Image]. solidangle.com. Retrieved from https://support.solidangle.com/display/AFCUG/Point+Light

2. Masters, M (2013). Understanding Different Light Types. [Web log post]. Retrieved from http://blog.digitaltutors.com/understanding-different-light-types/

3. Flippednormals.com (2015). Introduction to 3D Lighting. [Image file]. Retrieved from https://flippednormals.com/wp-content/uploads/2013/11/intro3D_lightSpot.jpg

4. Tech316.blogspot.com (2007). Digital Lighting and Rendering in Maya. [Web log post]. Retrieved from http://tech316.blogspot.com.au/2007/09/maya-light-types.html

5. Tech316.blogspot.com (2007). Digital Lighting and Rendering in Maya. [Image file]. Retrieved from http://tech316.blogspot.com.au/2007/09/maya-light-types.html

6. Junior Boarder (2009). Interior render using Mental Ray in Maya [Image]. Lugher3D.com/forum. Retrieved from http://www.lugher3d.com/forum/post-and-view-finished-work/3d-stills/first-interior-render-in-mental-ray-using-maya

7. Lopez, R (2014, January 27). What is 3D Rendering? [Web log post]. Retrieved from http://www.braindistrict.com/posts/what-is-3d-rendering?locale=en

8. Desowitz, B (2009). Bringing Benjamin Button to Life. [Image file] Retrieved from http://www.awn.com/vfxworld/bringing-benjamin-button-life

With the ever-increasing availability of high-end, user-friendly 3D development software, 3D graphics can be seen just about anywhere. From advertising content to phones, games, TV and movies, 3D graphics have come a long way since first emerging during the early 1970s.

It was at the University of Utah during the early 70s that the first examples of shaded 3D CGI were produced for display on TV monitors by pioneers like Jim Blinn. Around the same time, Ed Catmull (who later co-founded Pixar) and Fred Parke also began creating 3D animated shorts like A Computer Animated Hand. However, in its infancy, CG was only possible by programming from scratch.

In 1975, to help realise his vision for Star Wars, George Lucas inspired a new wave of 3D special effects with the birth of Industrial Light and Magic (ILM). It was here that audiences saw the first "extensive use of 3D computer generated imagery and animation ever seen in a feature film during a very basic, untextured wireframe sequence of the Death Star trench" (Mori, 2015).

Figure 1: A scene from Star Wars demonstrating the first use of CGI in film.

Only a few years later, in 1982, CGI sequences were heavily used in both Star Trek II and Disney's Tron. The success of these movies and the falling cost of computer hardware opened the door for CGI-based software companies including Autodesk, Alias Research, Wavefront and Omnibus, who went on to dominate the industry by 1986. It was also around 1986 that several other innovations were introduced, including the GIF, JPEG and TIFF formats, as well as Adobe Illustrator.

Figure 2: A scene from Disney's Tron.

In 1987, after Omnibus went bankrupt, Kim Davidson and Greg Hermanovic started Side Effects, which was built around the Production of Realistic Image Scene Mathematical Simulation software, or PRISMS. By the 1990s PRISMS was being used to create visual effects for hit films including Apollo 13, Twister and Titanic.

Around the same time, in 1988, aiming to convince MAXON Computer of their newly written software’s potential, the Losch brothers entered it into a monthly contest run by MAXON’s Kickstart magazine and promptly won the competition. Incredibly, they had just developed the very beginnings of what would become Cinema 4D (Mori, 2015).

With the advancement of Alias and PowerAnimator software in the mid-90s, Chris Landreth went on to develop beta versions of Maya. It wasn't until 1998 that the first version of Maya was released under a new Alias/Wavefront company, which continued to develop the software until Autodesk absorbed the company in 2006. Autodesk continues to release updates today.

John Lasseter is an American animator, film director, screenwriter, producer and the chief creative officer of Pixar, Walt Disney Animation Studios, and DisneyToon Studios. He is also the Principal Creative Advisor for Walt Disney Imagineering. (Wikipedia, 2015).

John has contributed to and worked on some of my favourite animated films including Toy Story and Monsters Inc.

Figure 3: John Lasseter discussing Toy Story.

Lasseter is a founding member of Pixar, where he oversees all of Pixar's films and associated projects as an executive producer. He also personally directed Toy Story, A Bug's Life, Toy Story 2, Cars and Cars 2.

He has won two Academy Awards, for Animated Short Film (Tin Toy), as well as a Special Achievement Award (Toy Story). Lasseter has been nominated on four other occasions: in the category of Animated Feature, for both Cars (2006) and Monsters, Inc. (2001), in the Original Screenplay category for Toy Story (1995) and in the Animated Short category for Luxo, Jr. (1986).

1. Mori, L. (2015). The Evolution of CG Software. [Web log post] Retrieved from http://www.3dartistonline.com/news/2013/12/the-evolution-of-cg-software/

2. EVLTube (2007). Making of the Computer Graphics for Star Wars (Episode IV) [Video file] Retrieved from https://www.youtube.com/watch?v=yMeSw00n3Ac

3. Slick, J (2015). 10 Pioneers in 3D Graphics. [Web log post] Retrieved from http://3d.about.com/od/3d-101-The-Basics/tp/10-Pioneers-In-3d-Computer-Graphics.htm

4. Design.Osu.edu (2015). An Historical Timeline of Computer Graphics and Animation.[Web log post] Retrieved https://design.osu.edu/carlson/history/timeline.html

5. Frost, J (2015). The Disney Blog. [Web log post] Retrieved from http://thedisneyblog.com/tag/john-lasseter/

6. Skimbako (2011). John Lasseter Explains the Back Story of Disney Pixar CARS 2. [Video file] Retrieved from https://www.youtube.com/watch?v=4bWtK4c-gEw

7. Wikia.com (2015). John Lasseter. [Web log post] Retrieved from http://pixar.wikia.com/wiki/John_Lasseter

8. Grover, Ronald (March 10, 2006). "The Happiest Place on Earth – Again". Bloomberg Businessweek. Retrieved October, 2015.

9. Huffpost (2015). [Image File] Retrieved from http://i.huffpost.com/gen/294767/images/r-CARS-2-BIG-OIL-large570.jpg

10. Serrano, A. (2014, November 7). Big Hero 6 Opens Today!! [Web log post]. Retrieved from http://armandserrano.blogspot.com.au/2014/11/big-hero-6-opens-today.html

11. Animation Arena (2012). Introduction to 3D Modeling. Retrieved October 1, 2015, from http://www.animationarena.com/introduction-to-3d-modeling.html

12. hghlander_72 (2008). Maya Screenshot [image]. Autodesk Forums. Retrieved from http://forums.autodesk.com/t5/gallery-work-in-progress/cgtalk-animals-in-repose-workshop-kirin-japanese-unicorn/td-p/4017660

13. Conner, Paul (2015). Modeling an Organized Head Mesh in Maya [image]. Digital Tutors. Retrieved from http://www.digitaltutors.com/tutorial/2266-Modeling-an-Organized-Head-Mesh-in-Maya

14. Masters, Mark (2014). What's the Difference? A Comparison of Modeling for Games and Modeling for Movies. [Web log post]. Retrieved from http://blog.digitaltutors.com/whats-the-difference-a-comparison-of-modeling-for-games-and-modeling-for-movies/

15. Singh, R. R. (2011, November 23). Baking Ambient Occlusion, Color And Light Maps In Maya Using Mentalray. [Web log post]. Retrieved from http://cgi.tutsplus.com/tutorials/baking-ambient-occlusion-color-and-light-maps-in-maya-using-mentalray--cg-11872

16. Ward, A (2013, December 9). How to texture a model in Maya. [Web log post]. Retrieved from http://www.creativebloq.com/3d/how-texture-model-maya-121310050

17. Taylor, James (2014, November 12). Maya bodybuilder CHARACTER MODELING tutorial. [Video File]. Retrieved from https://www.youtube.com/embed/spi4lGxnMZg

18. Taylor, James (2014, December 16). UV MAPPING MADE EASY! UV unwrapping tutorial for Maya 2015 / 2016 / LT 2016. [Video File]. Retrieved from https://www.youtube.com/embed/HLhazEa8wmw

19. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/L0Au_i2qMuQ

20. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/LG0EvddoKfk

21. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/GOyZtHD5cks

22. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/rFTTdurc48M

23. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/0uSRbNPrv0U

24. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/YS6RkiIb6Nc

25. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/VQTewce2cSo

26. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/4ia5i5Z4CyQ

27. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/nH8TnL-AUO8

28. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/BpkvdTkIlTs

29. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/F8L3MKeYbJ4

30. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/aqwrgoNWLCw

31. Ward, A (2014, November 19). How to texture a model in Maya. [Video File]. Retrieved from https://www.youtube.com/embed/8754C98gcIA

32. Capttrob (2007, August 21). Lock on Axis of Object [Image]. Retrieved from http://www.digitaltutors.com/forum/printthread.php?t=5232

33. Foofy 69 (2013, October 13). How to make UV Maps [Image]. Retrieved from http://forum.unity3d.com/attachments/soldier-jpg.84066/

34. Slick, J. (2015). What is Rigging? [Web log post]. Retrieved from http://3d.about.com/od/Creating-3D-The-CG-Pipeline/a/What-Is-Rigging.htm

35. Animation Arena (2012). Introduction to 3D Animation. Retrieved October 10, 2015, from http://www.animationarena.com/introduction-to-3d-animation.html

36. Oreilly Video Training (2011, August 13). Maya 2012 Tutorial - Keyframe Animation Fundamentals. [Video File]. Retrieved from

37. Full Sail University (2013, May 29). What is Motion Capture? [Video File]. Retrieved from
